What's up, what's uppp!

Why you're getting this: I'm sharing some tools and approaches we use at Sidetool and personally. I think you'll find them useful.

Zero pressure to stick around; just click unsubscribe if this isn't for you.

Thanks to Miguel Jimenez for sharing this newsletter. Miguel is building Orbit Child, reimagining early learning and care for kids. Check them out at orbitchild.com.

By the way, I like to shout out everyone helping us grow. So if you share this with a friend and they subscribe, just let me know and you'll get a shout-out in the next one.

Something new this time:

I want to start sharing more than just tools and workflows.

There's a lot happening in AI right now. Every week something shifts. And I find myself constantly reading, testing, forming opinions on what matters and what doesn't.

So from now on, I'll also share things that are catching my attention. Not just what I'm using, but what I think is worth paying attention to.

The tools and workflows will keep coming. But now you'll also get the signal on what's happening out there, from our perspective.

Let's get into it.

What I've been using

Firecrawl MCP

I connected Firecrawl to Warp using their MCP server.

Now before I send any cold email, reach out to a new lead, or hop on a call with someone I don't know, I just ask Warp:

"Scrape this company's website and tell me what they do, who they serve, and what we could offer them."

Ten seconds later, I have context. Real context. Not a LinkedIn summary or a guess.

Warp already knows what Sidetool does. So it takes their website, compares it to what we offer, and comes back with a tailored angle: what they might need, how we could help, even draft copy for the outreach.

It changed how I prepare for conversations. Instead of spending 15 minutes clicking around their site and figuring out the angle, I get it instantly.

You could also automate this: run it when a new lead comes in, when someone signs up, or before every outbound email. But honestly, I just use it manually, and it's already saving me hours.
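If you want to try the automated version, here's a minimal Python sketch of the trigger logic. It assumes a hypothetical `on_new_lead` hook you'd call from your CRM or signup webhook, and `scrape` and `summarize` are placeholder functions you'd wire up to Firecrawl and whatever model you use. None of these names come from Firecrawl or Warp.

```python
from typing import Callable, Optional

# Free email providers tell us nothing about the company, so we skip them.
# Illustrative list only, not exhaustive.
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com", "icloud.com"}

# Hypothetical prompt template, mirroring the question I ask Warp by hand.
PROMPT_TEMPLATE = (
    "Here is the content of {url}:\n\n{content}\n\n"
    "Tell me what this company does, who they serve, "
    "and what we could offer them."
)


def company_url_from_email(email: str) -> Optional[str]:
    """Guess a company website from a work email; None for free-mail or malformed addresses."""
    if "@" not in email:
        return None
    domain = email.strip().lower().rsplit("@", 1)[-1]
    if domain in FREE_EMAIL_DOMAINS:
        return None
    return f"https://{domain}"


def on_new_lead(
    email: str,
    scrape: Callable[[str], str],     # hypothetical: url -> page text (e.g. via Firecrawl)
    summarize: Callable[[str], str],  # hypothetical: prompt -> model answer
) -> Optional[str]:
    """Research a new lead's company before anyone reaches out."""
    url = company_url_from_email(email)
    if url is None:
        return None  # nothing to research; fall back to manual prep
    content = scrape(url)
    return summarize(PROMPT_TEMPLATE.format(url=url, content=content))


if __name__ == "__main__":
    # Offline stubs so the sketch runs without any API keys.
    def fake_scrape(url: str) -> str:
        return f"Acme builds widgets. (scraped from {url})"

    def fake_summarize(prompt: str) -> str:
        return "Brief for: " + prompt.splitlines()[0]

    print(on_new_lead("jane@acme.io", fake_scrape, fake_summarize))
```

Skipping free-mail domains is my own assumption: a Gmail address doesn't point at a company site, so there's nothing useful to scrape.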

MCP server: github.com/firecrawl/firecrawl-mcp-server

Cursor Rules

If you're using Cursor without a .cursorrules file, you're using maybe 30% of what it can do.

A .cursorrules file tells Cursor how to generate code for YOUR project. Your stack, your conventions, your patterns.

Instead of getting generic React code, you get code that matches your exact style guide.

Drop the file in your repo root. That's it.
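The file itself is just plain-text instructions. As an illustration only, here's a small Python helper that writes an example `.cursorrules` to a repo root. The rules in it are hypothetical placeholders for a React + TypeScript project (not the Sidetool rules), so swap in your own stack and conventions.

```python
from pathlib import Path

# Hypothetical example rules; replace with your own stack, conventions, and patterns.
EXAMPLE_RULES = """\
You are working in a React + TypeScript codebase.
- Use function components and hooks; no class components.
- Use named exports, not default exports.
- Style with Tailwind utility classes; do not add new CSS files.
- Put tests next to the component as <Name>.test.tsx.
"""


def write_cursor_rules(repo_root: str, rules: str = EXAMPLE_RULES, overwrite: bool = False) -> Path:
    """Write a .cursorrules file to the repo root, refusing to clobber an existing one."""
    path = Path(repo_root) / ".cursorrules"
    if path.exists() and not overwrite:
        raise FileExistsError(f"{path} already exists; pass overwrite=True to replace it")
    path.write_text(rules, encoding="utf-8")
    return path
```

Run `write_cursor_rules(".")` from your repo root, then edit the file by hand. The no-overwrite default is there so you don't wipe rules your team already wrote.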

Before: Cursor generates code, you spend 10 minutes fixing it to match your standards.

After: Cursor generates code the way you would write it.

If you want the Cursor rules folder we use at Sidetool, reply to this email and I'll send it over.

What caught my attention this week

Google scared OpenAI

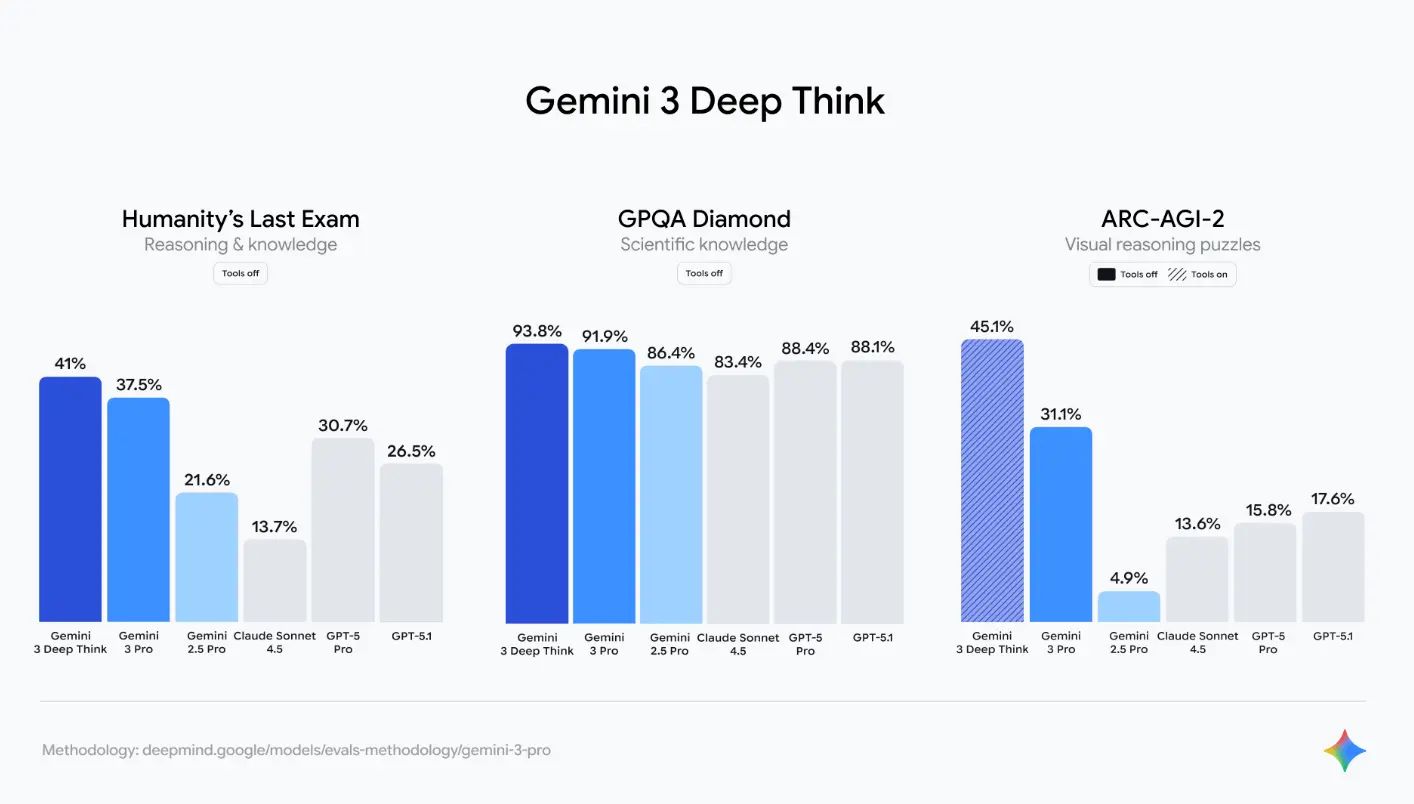

Google released Gemini 3 with Deep Think mode.

Google calls it their "strongest reasoning capabilities yet."

Sounds like every Apple keynote, except this time the benchmarks actually back it up.

The numbers are insane: 45% on ARC-AGI-2 (one of the hardest reasoning benchmarks out there). For context, GPT-4o was at 5%.

OpenAI's response? Internal "Code Red."

They paused multiple projects (ads, new agents, a briefing service) to focus resources on catching up. There's reportedly a new model called "Garlic" in the works.

The interesting part: Google trained Gemini 3 almost entirely on its own TPUs. Most of its rivals depend heavily on Nvidia. Google doesn't.

For the first time in a while, OpenAI is playing defense.

What this means for us:

This competition is great.

It means we get access to better models, faster. And it's becoming pretty clear that these companies have the capacity to build way better stuff than what they've been releasing.

They just… weren't. For whatever reason.

Now they have to.

I'm excited. Hopefully next week we'll have news from OpenAI or a new model drop. The race is on.

Which AI is the best? Let the AIs decide

I'm always asking myself which model is best for what.

Turns out Andrej Karpathy had the same question, so he built an app that sends your prompt to multiple AI models (GPT-5.1, Gemini 3, Claude, Grok) at once.

They each answer, then review each other's responses anonymously and vote on the best one.
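The core loop is easy to picture. Here's a minimal Python sketch of the idea, not Karpathy's actual code: every model answers, the answers get shuffled behind anonymous labels, each model votes for the best label, and the votes are tallied. The `models` dict of plain functions is a hypothetical stand-in for real API clients, and one vote per reviewer is my simplification; check the repo for how it actually aggregates the reviews.

```python
import random
from collections import Counter
from typing import Callable, Dict

# A "model" here is any function from prompt text to answer text
# (hypothetical stand-in for a real API client).
Model = Callable[[str], str]


def run_council(prompt: str, models: Dict[str, Model], seed: int = 0) -> Dict[str, int]:
    """Ask every model, anonymize the answers, let every model vote, and tally votes per model."""
    # Step 1: every model answers the same prompt.
    answers = {name: ask(prompt) for name, ask in models.items()}

    # Step 2: shuffle and relabel so reviewers don't see which model wrote which answer.
    names = list(answers)
    random.Random(seed).shuffle(names)
    label_to_name = {f"Response {chr(ord('A') + i)}": name for i, name in enumerate(names)}
    ballot = "\n\n".join(f"{label}:\n{answers[name]}" for label, name in label_to_name.items())
    review_prompt = (
        f"Question: {prompt}\n\n{ballot}\n\n"
        "Which response is the most accurate and insightful? Reply with its label only."
    )

    # Step 3: each model votes once; replies that aren't a valid label are discarded.
    votes: Counter = Counter()
    for ask in models.values():
        choice = ask(review_prompt).strip()
        if choice in label_to_name:
            votes[label_to_name[choice]] += 1

    # Map votes back to real model names only after all voting is done.
    return {name: votes[name] for name in models}
```

The shuffle is what makes the review anonymous; the fixed `seed` only makes runs reproducible.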

The twist: "The models consistently praise GPT-5.1 as the best and most insightful model, and consistently select Claude as the worst."

Which is funny because I personally use Claude a lot.

Karpathy himself said he prefers Gemini because GPT is "too wordy." So even the guy who built the thing disagrees with the AI consensus.

The whole thing is open source: github.com/karpathy/llm-council

Which model do you use the most, and why? Reply and let me know. I'm curious.

Claude Code in Slack

Anthropic just launched Claude Code inside Slack.

It reads the conversation, figures out which repo to use, posts progress updates in the thread, and opens a PR when it's done.

It's like having a developer on your team who you can just tag whenever you need something built.

This is a research preview for now, but if it works well, it could change how teams ship code. Less context switching, less ticket writing, just "hey @Claude, can you fix this?"

AI is changing how we work, and I'm here for it.

That's it for this week.

Two tools. Three things happening. Real signal.

If you try any of these, or have thoughts on what's happening, reply. I read everything.

-Ed
